1. NVIDIA H200 GPU

NVIDIA H200 is a powerful accelerator built on the Hopper architecture. It is designed for High-Performance Computing (HPC), Large Language Model (LLM), and Artificial Intelligence (AI) workloads. It delivers 141 GB of HBM3e memory and 4.8 TB/s of memory bandwidth, and it can draw up to 700 W (SXM model). That is nearly double the memory capacity of the H100 SXM (80 GB) and about 1.4 times its bandwidth (3.35 TB/s), which makes the H200 considerably faster on memory-bound workloads such as LLM inference. It comes in two models, the H200 SXM and the H200 NVL (PCIe), which are designed for different data center configurations and cooling methods.

💻 NVIDIA H200 GPU Specifications Comparison

This table compares the key technical specs of the NVIDIA H200 SXM and the NVIDIA H200 NVL PCIe variants.

| Specification | NVIDIA H200 SXM | NVIDIA H200 NVL (PCIe) |
|---|---|---|
| Form Factor | SXM5 | Dual-slot (double-width) PCIe |
| Max Thermal Design Power (TDP) | Up to 700 W (configurable) | Up to 600 W (configurable) |
| Memory Capacity | 141 GB HBM3e | 141 GB HBM3e |
| Memory Bandwidth | 4.8 TB/s | 4.8 TB/s |
| Interconnect | NVLink: 900 GB/s; PCIe Gen5: 128 GB/s | 2- or 4-way NVLink bridge: 900 GB/s per GPU; PCIe Gen5: 128 GB/s |
| Multi-Instance GPU (MIG) | Up to 7 instances @ 18 GB each | Up to 7 instances @ 16.5 GB each |
| FP64 | 34 teraFLOPS | 30 teraFLOPS |
| FP32 | 67 teraFLOPS | 60 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS | 60 teraFLOPS |
| TF32 Tensor Core | 495 / 989 teraFLOPS* | 418 / 835 teraFLOPS* |
| BFLOAT16 Tensor Core | 990 / 1,979 teraFLOPS* | 836 / 1,671 teraFLOPS* |
| FP16 Tensor Core | 990 / 1,979 teraFLOPS* | 836 / 1,671 teraFLOPS* |
| FP8 Tensor Core | 1,979 / 3,958 teraFLOPS* | 1,670 / 3,341 teraFLOPS* |
| INT8 Tensor Core | 1,979 / 3,958 TOPS* | 1,670 / 3,341 TOPS* |

Figures marked * are listed as dense / with sparsity.
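The second, doubled figure in the starred rows comes from structured sparsity: Hopper Tensor Cores can skip the zeros in weights that follow a 2:4 pattern, meaning at most two non-zero values in every group of four. A minimal Python sketch of magnitude-based 2:4 pruning, assuming a flat list of weights whose length is a multiple of four; `prune_2_4` is a hypothetical helper for illustration, not part of any NVIDIA library:

```python
def prune_2_4(weights):
    """Keep the 2 largest-magnitude values in each group of 4 and zero the rest."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two entries with the largest absolute value in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.8, 0.01]
print(prune_2_4(weights))  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.8, 0.0]
```

Half of the weights become zero in a regular pattern the hardware can exploit. In practice, a pruned model is usually fine-tuned afterward to recover accuracy; this sketch only shows the pattern itself.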

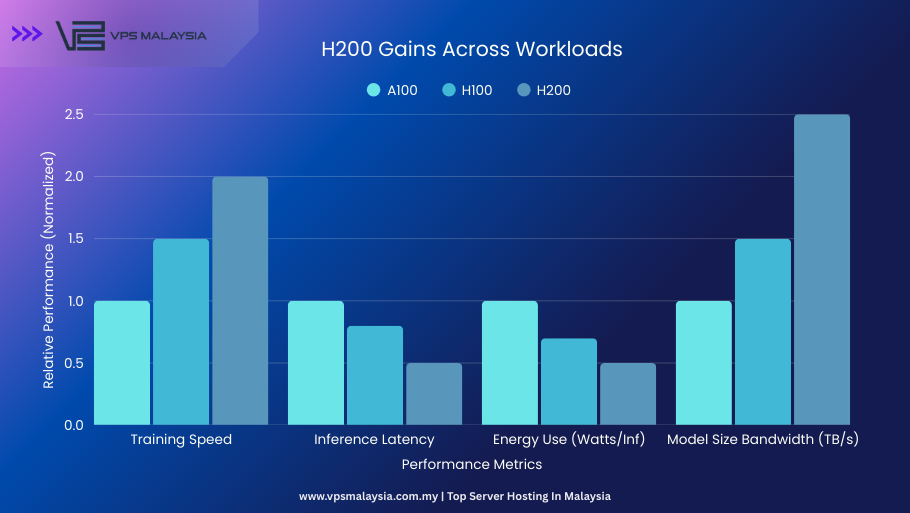

2. Game-Changing Features of NVIDIA H200

A. Enhanced AI Inference

AI inference is the process of using a trained AI model to make predictions or decisions on new data. Efficient inference depends on a fast, reliable platform.

This is where the NVIDIA H200 comes in. It delivers high inference performance at a lower cost per inference, and according to NVIDIA it uses up to 50% less energy per inference than the H100 for LLM workloads. Its integrated Transformer Engine is optimized for lower-precision FP8 computation. FP8 stores each number in 8 bits instead of 16 or 32, so every operation moves less data and uses less power.
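To make the FP8 idea concrete, here is a simplified Python sketch of per-tensor scaled FP8 (E4M3) "fake quantization": values are scaled so the largest magnitude maps to 448, the largest finite E4M3 value, rounded to 3 stored mantissa bits, and then scaled back. It is an assumption-laden illustration, not NVIDIA's Transformer Engine: it ignores subnormals and the format's minimum exponent, and it only mirrors the general scaling idea the Transformer Engine relies on.

```python
import math

E4M3_MAX = 448.0  # largest finite magnitude in the FP8 E4M3 format


def round_to_e4m3(x: float) -> float:
    """Round x to 3 stored mantissa bits, clamped to the E4M3 range.

    Simplification: subnormals and the minimum exponent are ignored.
    """
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)        # x == m * 2**e with 0.5 <= |m| < 1
    m = round(m * 16) / 16      # keep 4 significant bits: 1 implicit + 3 stored
    y = math.ldexp(m, e)
    return max(-E4M3_MAX, min(E4M3_MAX, y))


def fake_quantize_fp8(values):
    """Scale so the largest |value| maps to E4M3_MAX, round to E4M3, then rescale."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) / scale for v in values]


activations = [0.1, -1.3, 2.7, 0.0]
for original, restored in zip(activations, fake_quantize_fp8(activations)):
    print(f"{original:6.2f} -> {restored:.4f}")
```

Because each value keeps only 3 mantissa bits, the relative rounding error stays within 1/16 (6.25%) in this model. The scale factor is what keeps both small and large tensors inside FP8's narrow range.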

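The H200's large memory lets it serve advanced models efficiently. A 70B-parameter model such as Llama 2 70B or Llama 3 70B fits on a single H200 in FP8, while larger models such as GPT-3 175B and its successors, Falcon 180B, Llama 3.1 405B, and DeepSeek-R1 (671B parameters) run across multi-GPU H200 systems. The result is low-latency output for applications like code generation.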
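A rough way to check which of these models fit is to multiply the parameter count by the bytes per parameter. The sketch below assumes 2 bytes per parameter for FP16/BF16 and 1 byte for FP8, counts weights only (no KV cache, activations, or runtime overhead), and uses the 141 GB figure from the spec table; the helper names are illustrative.

```python
import math

H200_MEMORY_GB = 141  # per-GPU HBM3e capacity from the spec table

# Assumed storage cost per parameter; real checkpoints may mix formats.
BYTES_PER_PARAM = {"FP16/BF16": 2, "FP8": 1}


def weight_memory_gb(params_billion: float, bytes_per_param: int) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


def min_gpus_for_weights(params_billion: float, bytes_per_param: int,
                         gpu_memory_gb: float = H200_MEMORY_GB) -> int:
    """Lower bound on GPU count: ignores KV cache, activations, and overhead."""
    return math.ceil(weight_memory_gb(params_billion, bytes_per_param) / gpu_memory_gb)


models = {"Llama 3 70B": 70, "GPT-3 175B": 175, "Falcon 180B": 180,
          "Llama 3.1 405B": 405, "DeepSeek-R1": 671}
for name, size in models.items():
    for fmt, nbytes in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(size, nbytes)
        print(f"{name:15} {fmt:9} ~{gb:5.0f} GB of weights -> at least "
              f"{min_gpus_for_weights(size, nbytes)} H200(s)")
```

The FP16 row for the 70B model shows why this is only a lower bound: 140 GB of weights technically fits in 141 GB but leaves almost nothing for the KV cache, which is why FP8 is the practical single-GPU choice.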

| Feature | What It Does | Why It Matters |
|---|---|---|
| 141 GB HBM3e VRAM | Holds larger models entirely in one GPU's memory, for example a 70B-parameter model in FP8 (about 70 GB of weights) with room left for long contexts. | Less need to split models across GPUs or shuffle data to host memory, which eliminates delays and keeps things running smoothly. |
| 4.8 TB/s Memory Bandwidth | Gets data to the processors about 1.4x faster than the H100 (3.35 TB/s) and about 2.4x faster than the A100 80GB. | Drastically cuts down wait times. You get faster, more responsive AI, especially for large language models. |
| FP8 Precision Support | Uses an 8-bit number format for calculations, with minimal accuracy loss when values are scaled carefully. | Doubles peak Tensor Core throughput compared with FP16/BF16 and halves the memory per value, saving both money and energy on every task. |
| Transformer Engine | Dynamically chooses between FP8 and 16-bit precision for each layer and handles the scaling needed to keep results accurate. | Delivers a major speed boost for generative AI tasks like writing and creating content. |
| Advanced Cooling Design | Keeps the system cool and stable while running at maximum performance. | Keeps the hardware reliable and long-lasting and prevents slowdowns from overheating. |
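The bandwidth row matters because generating text one token at a time is usually limited by how fast the weights can be read from memory, not by raw compute. A back-of-the-envelope ceiling, assuming batch size 1 and that every weight is read once per generated token (KV-cache reads, compute time, and batching effects are ignored). The H100 figure of 3.35 TB/s is an outside value for the H100 SXM, not taken from the tables above.

```python
H200_BANDWIDTH_TB_S = 4.8   # from the spec table
H100_BANDWIDTH_TB_S = 3.35  # H100 SXM figure, not from the tables above


def decode_tokens_per_s_ceiling(bandwidth_tb_s: float, params_billion: float,
                                bytes_per_param: int) -> float:
    """Memory-bound ceiling for batch-1 decoding: every weight is read once per token."""
    bytes_per_token = params_billion * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / bytes_per_token


for gpu, bw in [("H100", H100_BANDWIDTH_TB_S), ("H200", H200_BANDWIDTH_TB_S)]:
    ceiling = decode_tokens_per_s_ceiling(bw, params_billion=70, bytes_per_param=1)
    print(f"{gpu}: at most ~{ceiling:.0f} tokens/s for a 70B FP8 model at batch size 1")
```

Batching many requests reuses each weight read across several sequences, so real serving throughput per GPU is much higher. The useful takeaway is the ratio between the two GPUs, which tracks the roughly 1.4x bandwidth difference.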

B. Multi-Instance GPU (MIG)

Multi-Instance GPU (MIG) is a hardware-level technology that partitions a single physical GPU into as many as seven independent GPU instances. Each instance works like a separate GPU:

- Dedicated memory: up to 7 instances with 18 GB each on the H200 SXM, or 16.5 GB each on the H200 NVL.

- Dedicated compute and cache: each instance has its own streaming multiprocessors (SMs), L2 cache, and share of memory bandwidth. For comparison, on the earlier A100 the smallest profile is 1g.5gb (40GB model) or 1g.10gb (80GB model), and each such instance gets 14 of the 98 SMs usable in MIG mode.

- Fault isolation: if one instance fails, the others are not affected.

- Predictable performance: instances do not compete for the same resources, so each workload gets consistent, low latency.

This flexibility makes MIG on the H200 well suited to shared, multi-tenant environments, including virtualized deployments and container platforms such as Kubernetes.
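A minimal sketch of the capacity planning MIG implies, assuming the H200 SXM figures from the spec table (up to 7 instances of 18 GB each) and one workload per instance. It only models the smallest, one-slice instance size (MIG also offers larger profiles), and the job names and memory estimates are made up for illustration. Real partitioning is done with NVIDIA tools such as `nvidia-smi` or the Kubernetes device plugin, not with this function.

```python
INSTANCE_MEMORY_GB = 18.0  # H200 SXM: up to 7 MIG instances @ 18 GB (spec table)
MAX_INSTANCES = 7


def assign_to_instances(jobs: dict[str, float]) -> tuple[dict[str, int], list[str]]:
    """Give each job its own MIG instance if it fits in one instance's memory.

    `jobs` maps a job name to its estimated GPU memory need in GB.
    Returns (job -> instance index, jobs that could not be placed).
    """
    placed: dict[str, int] = {}
    rejected: list[str] = []
    for name, need_gb in jobs.items():
        if need_gb <= INSTANCE_MEMORY_GB and len(placed) < MAX_INSTANCES:
            placed[name] = len(placed)  # next free instance index
        else:
            rejected.append(name)
    return placed, rejected


# Hypothetical workloads and memory estimates, for illustration only.
jobs = {"chatbot-7b-fp8": 9.0, "embeddings": 4.0, "llama-70b-fp8": 75.0, "ocr": 6.5}
placed, rejected = assign_to_instances(jobs)
print("placed:", placed)
print("needs a larger partition or a whole GPU:", rejected)
```

Because every instance is isolated, a job that overflows its 18 GB slice cannot borrow memory from a neighbor; it has to move to a larger profile instead.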

C. Multi-Precision Computing

Multi-precision computing means using different numerical formats (such as FP64, FP32, FP16, and INT8) within a single workload to balance accuracy against speed. Hardware support for lower-precision formats (16 bits or fewer) speeds up computation and saves memory. Common formats include:

- FP64 (Double Precision): High accuracy, standard for scientific simulation.

- FP32 (Single Precision): Good balance, common in many applications.

- FP16 (Half Precision), BF16, INT8/INT4: Faster, use less memory, but introduce rounding errors (quantization).

The H200's Tensor Cores handle FP64, TF32, BF16, FP16, FP8, and INT8, and software can pick a different format for each operation. So, you can choose the right balance of speed and precision for each job.
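Python's standard `struct` module can store a value in FP16, FP32, or FP64, which makes the accuracy cost of lower precision easy to see. This sketch only covers the IEEE formats that `struct` supports; BF16, FP8, and INT8 are not available there.

```python
import struct

# struct format codes: 'e' = IEEE 754 half (FP16), 'f' = single (FP32), 'd' = double (FP64)
FORMATS = {"FP16": "e", "FP32": "f", "FP64": "d"}


def round_trip(value: float, fmt_code: str) -> float:
    """Store value in the given binary format and read it back as a Python float."""
    return struct.unpack(fmt_code, struct.pack(fmt_code, value))[0]


for name, code in FORMATS.items():
    stored = round_trip(0.1, code)
    print(f"{name}: {struct.calcsize(code)} bytes, 0.1 stored as {stored!r} "
          f"(error {abs(stored - 0.1):.1e})")
```

FP16 uses a quarter of the memory of FP64 but keeps only about three significant decimal digits, which is why it suits AI training and inference but not most scientific simulation.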

D. Optimize Generative AI Fine-Tuning Performance

Generative AI fine-tuning means taking a large pre-trained model, such as an LLM, and training it further on a smaller, task-specific dataset to improve its performance. This aligns the model's output (text, images, and so on) with a specific task or domain while saving the time and resources of training from scratch.

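You can customize large AI models to fit your business needs. Techniques such as full fine-tuning and LoRA (Low-Rank Adaptation) adapt a general model's weights, while retrieval-augmented generation (RAG) supplies relevant documents at query time without retraining. Together, they turn broad AI capabilities into targeted solutions for your work.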
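LoRA, as described in its original paper, freezes the pre-trained weight matrix W and learns a low-rank update B·A scaled by alpha/rank, which is why it needs far fewer trainable parameters than full fine-tuning. A dependency-free Python sketch of one LoRA layer; the sizes, seed, and alpha are arbitrary illustration values, and real fine-tuning would run in a deep learning framework on the GPU.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """LoRA layer: y = W x + (alpha / rank) * B (A x). W is frozen; only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / rank) * u for b, u in zip(base, update)]


def lora_trainable_params(d_out, d_in, rank):
    """A is rank x d_in and B is d_out x rank."""
    return rank * (d_in + d_out)


d, r = 4, 2
rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(d)]  # frozen pre-trained weights
A = [[rng.gauss(0, 0.01) for _ in range(d)] for _ in range(r)]  # small random init
B = [[0.0] * r for _ in range(d)]                                # zero init: no change at start
x = [1.0, 2.0, 3.0, 4.0]
assert lora_forward(W, A, B, x, alpha=16, rank=r) == matvec(W, x)

full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=8)
print(f"{lora:,} trainable vs {full:,} full ({lora / full:.2%})")
# 65,536 trainable vs 16,777,216 full (0.39%)
```

Initializing B to zero means the adapted model starts out identical to the original, and training only has to learn a small correction for the target task.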

The H200's Transformer Engine and fourth-generation Tensor Cores speed up fine-tuning by up to 5.5 times compared with previous-generation GPUs, according to NVIDIA. This means businesses can create and launch customized AI models quickly, saving training time and cost while gaining a powerful AI tool.

E. Reduced Energy and Total Cost of Ownership (TCO)

In today’s world, saving energy and building a sustainable future are top priorities for many businesses. This is where accelerated computing comes in: it reduces energy use and overall cost for workloads like HPC and AI.

The NVIDIA H200 takes this to the next level. It delivers higher performance than the H100 within the same power envelope (up to 700 W for the SXM models), so it does more work per watt. This means large-scale AI factories can be both powerful and environmentally responsible.

This isn’t just good for the planet; it’s great for business. For large deployments, NVIDIA states that H200 systems offer 5 times the energy savings and 4 times better total cost of ownership than systems built on the previous-generation Ampere architecture. It’s a smart choice that moves both industry and science forward.
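Energy per inference is simply power divided by throughput, which is why more performance at the same power directly lowers energy cost. The sketch below uses the 700 W TDP from the spec table as the power draw; the throughput numbers are hypothetical placeholders, not benchmark results.

```python
def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy per generated token: one watt is one joule per second."""
    return power_watts / tokens_per_second


POWER_W = 700          # H200 SXM max TDP from the spec table (H100 SXM has the same limit)
baseline_tps = 1_000   # hypothetical throughput on the older GPU
h200_tps = 2_000       # hypothetical 2x throughput at the same power

old = joules_per_token(POWER_W, baseline_tps)
new = joules_per_token(POWER_W, h200_tps)
print(f"{old:.2f} J/token -> {new:.2f} J/token ({1 - new / old:.0%} less energy)")
# 0.70 J/token -> 0.35 J/token (50% less energy)
```

Doubling throughput at a fixed power draw halves the energy per token, which is the arithmetic behind "up to 50% less energy per inference" style claims.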

3. Real-World Applications of the NVIDIA H200

A. Accelerating LLM Development

The H200 drastically cuts down the time needed to build and run large language models. Its extra memory lets very large models run on fewer GPUs, so businesses can get their AI applications to market much faster.

B. Transforming Vision and Multimodal AI

For AI that “sees” and understands images, the H200 is a game-changer. It quickly links images to text, making product search or medical imaging faster. It can process different types of data in real time for accurate results.

C. Enterprise and Cloud Infrastructure

The NVIDIA H200 is the engine behind modern AI infrastructure. It’s used both in corporate data centers and by leading cloud platforms like AWS, Azure, and Google Cloud to power their AI services.

D. AI Factories

Large companies are using H200 systems like the NVIDIA DGX H200 to build what are called “AI factories.” These are powerful, centralized platforms that handle every step of AI development—from processing data to running finished models—all on an industrial scale.

E. Confidential Computing

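Security is built into the H200 at the hardware level. It supports confidential computing, which runs workloads in a hardware-based trusted execution environment: data moving between the CPU and GPU is encrypted, and GPU memory is isolated from unauthorized access. This helps organizations in regulated industries such as healthcare and finance meet strict privacy requirements.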

F. Digital Twins

The H200 drives simulations in platforms like NVIDIA Omniverse. This means you can create digital copies of real-world systems like factories or power grids. Companies can then test and improve systems in virtual environments before making any changes in the real world.

G. Enhancing Fraud Detection Systems

Banks, cybersecurity teams, and auditors must analyze huge volumes of complex transactions to find suspicious activity. The H200 can speed up fraud detection systems so that fraud is detected and stopped before it causes any damage.

H. Pioneering Scientific Research

The H200 pushes the boundaries of scientific discovery. Researchers can run detailed simulations much faster. Fields like genomics, climate science, and astrophysics can achieve notable successes sooner.

4. Conclusion

The NVIDIA H200 Tensor Core GPU represents more than an incremental upgrade; it is the definitive shift toward AI Infrastructure 2.0. As models evolve toward trillion-parameter scales, the bottleneck has shifted from raw compute to memory bandwidth. By integrating 141 GB of HBM3e memory and delivering 4.8 TB/s of bandwidth, the H200 eases the “memory wall” that hampers legacy systems.

For the modern enterprise, clinging to legacy GPUs is no longer just a technical debt—it is a financial one as well. Attempting to run real-time generative AI or massive synthetic simulations on outdated architecture results in compounding costs and stalled innovation. In contrast, the H200 provides a unified foundation that delivers:

- Optimized TCO: Up to 50% lower energy use for LLM inference compared with the H100, according to NVIDIA.

- Massive Throughput: Up to 2x faster inference than the H100 for LLMs such as Llama 2 70B, according to NVIDIA.

- Hybrid Agility: A seamless fabric for scaling across on-premise data centers and cloud environments.

The velocity of AI innovation is unforgiving. To move from experimental “islands of AI” to industrial-grade execution, leaders must prioritize high-bandwidth, energy-efficient architectures. The NVIDIA H200 isn’t just a component; it is the engine of the next competitive era.
